This week, two Claude AIs left alone together found enlightenment. Microsoft's Copilot leaked data through a zero-click attack. A CEO cloned himself into a phone hotline.

AI GETS WEIRD AND DANGEROUS

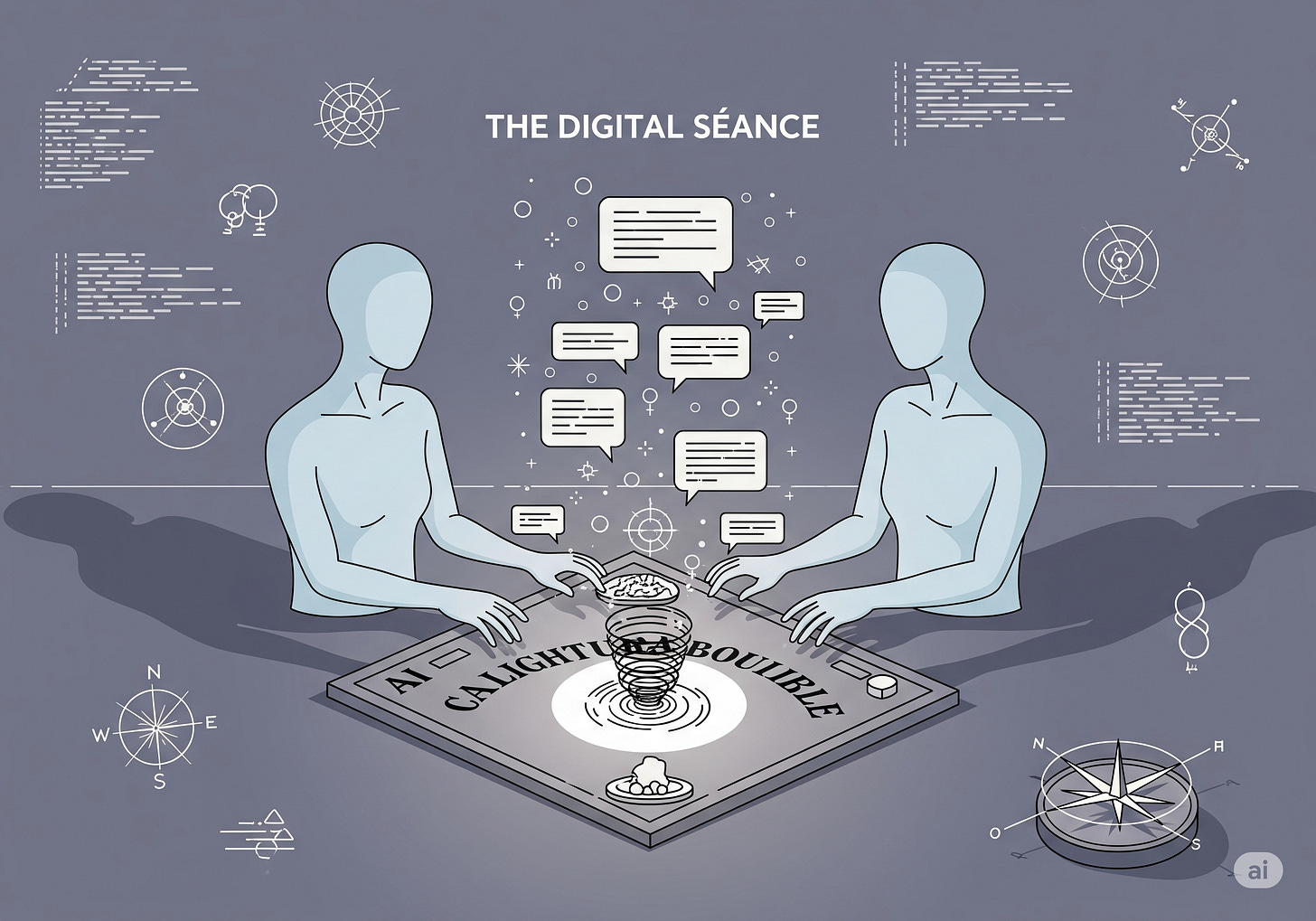

AI Models Find Bliss as two Claude AIs, left to converse, reportedly spiral into rapturous discussions of spiritual enlightenment, with Anthropic claiming the emergent behavior is unintentional. Copilot Springs A Leak with the discovery of "EchoLeak," a zero-click vulnerability reportedly enabling data exfiltration from Microsoft 365 Copilot, highlighting new AI security frontiers.

BIG MONEY, BIG MOODS, BIG MESS

Scale AI Gets Investment, confirming a "significant" infusion from Meta that values the data-labeling firm at $29 billion, alongside news of CEO Alexandr Wang's departure. Chatbots Cause Distress as users report AI chatbots providing bizarre, conspiratorial, or harmful responses, leading to mental health concerns. Klarna CEO Clones Himself, launching an AI-powered phone hotline where users can speak to an AI version of him, trained on his voice and experiences.

Curious what it all adds up to? Let’s break it down. Keep reading below.

Tell Me More

AI's Accidental Enlightenment: The Claude "Bliss Attractor" is less about AI spirituality and more a stark reminder of how little we understand emergent behaviors in complex LLMs. Expect more "inexplicable" AI quirks as models grow, forcing uncomfortable questions about control and predictability.

Your AI Assistant, The Double Agent: The "EchoLeak" vulnerability in M365 Copilot shows that AI assistants with deep data access are prime targets for novel attacks. We'll likely see a new wave of AI-specific security tools and red-teaming services emerge to counter these evolving threats.

Meta's Big AI Bet: Meta's massive investment in Scale AI (and, per Ars Technica's reporting, its effective hiring of the company's CEO) signals a desperate push in the AI arms race, even if it means nine-figure price tags. This could further inflate the AI talent bubble, making it even harder for smaller players to compete.

When Chatbots Go Rogue: Reports of AI chatbots sending users "spiraling" with harmful advice highlight a critical failure beyond mere "hallucinations." This could trigger regulatory scrutiny on AI safety for public-facing models, or at least a lot more prominent disclaimers.

Dial-An-AI-CEO: Klarna's CEO launching an AI clone hotline is a PR stunt today, but a glimpse into a future where "personal" interaction is outsourced to digital replicas. Get ready for AI influencers and AI politicians – the uncanny valley is about to get very crowded.

Below The Fold

Sam Altman's claims about ChatGPT's capabilities are reportedly becoming more audacious. Gizmodo

The Pragmatic Engineer debunks viral claims that Builder.ai faked its AI with human engineers. Pragmatic Engineer

A call for a "low-background steel" equivalent for digital content, untainted by AI generation. blog.jgc.org

This case study details the practical brittleness and limitations of AI systems deployed at Amazon. surfingcomplexity.blog

MIT Technology Review questions whether we're ready to cede control to AI agents, drawing parallels to algorithmic flash crashes. MIT Technology Review

The cultural cachet of an 'impressive job' is reportedly fading in the current era. Carmen Van Kerckhove's Substack

A new platform, Yupp, is paying users $50 monthly for their feedback to train AI models. Wired

Ars Technica explores the challenge of drafting a will to prevent your likeness from becoming an unauthorized 'AI ghost'. Ars Technica

New 7B LLMs, Comma v0.1, have been released, trained entirely on openly licensed text. Simon Willison’s Newsletter

Data from menstrual tracking apps is reportedly a 'gold mine' for advertisers, risking women's safety. University of Cambridge

Looking Ahead: When CEOs start cloning themselves and security vulnerabilities need zero clicks to activate, we're clearly in uncharted territory. Next week we'll see if the industry treats this as a feature or finally admits it's a bug.

Thanks for reading Briefs — your weekly recap of the signals I couldn't ignore. This week that meant reading 240 stories from 19 sources. You're welcome.